To answer the questions directly: yes, the use of AI, especially when abused, is extremely harmful to students and can put teachers in uncomfortable situations (more on that later). The whole point of this article, however, is to expand on a point that I personally haven’t seen mentioned whenever students abusing AI is brought up.

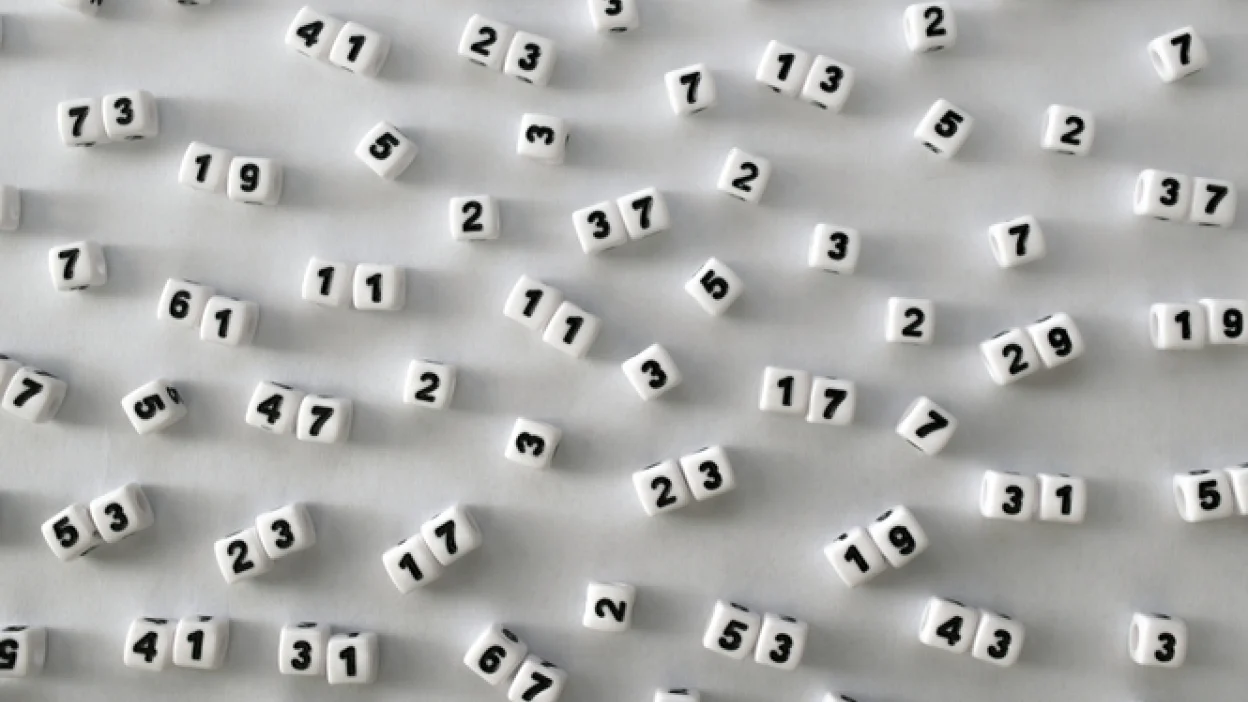

Recently, research on how many times the word ‘delve’ has been used in papers showed a massive increase in its usage. The word itself means to ‘dive’ into something, normally a topic; for example, in this article I am delving into the consequences and spread of AI abuse. I am not sure if you are able to see it, but the line spiked specifically between 2022 and 2024, right when ChatGPT was popularized and AI started growing. It is not proven, but many people believe that this is due to ChatGPT abuse (yes, even in professional papers). Unfortunately, the only explanation I found on the internet was on Twitter (thanks, Alexandre de Moraes), and I had to come up with something, so I asked ChatGPT itself. It agreed right away that ‘delve’ is a word it commonly uses in texts. Some of the reasons it gave me were that ‘delve’ showed up a lot in its training data, context suitability (formality), linguistic patterns and lack of hardcoding. The last two both mean that ChatGPT doesn’t have any phrases hardcoded into it, so it looks for patterns that it can repeat constantly to be more efficient, which led to it using the word ‘delve’ a lot.

Now, there are two big questions about this use: was it used exclusively to enhance vocabulary? And what if researchers develop a dependency on ChatGPT to write out their ideas? Unfortunately, there is no answer to the first question. It relies on the honesty of each researcher, which is the least one should expect from a professional, and if the use goes beyond vocabulary, it might lead to ChatGPT misinterpreting data or even coming up with incorrect information. For the second question, I personally encourage everyone to look more into the topic and develop their own opinion. However, I believe that excessive use of AI in children’s learning can hinder the development of neuroplasticity by reducing the mental effort necessary for deep learning.

Daniel Willingham, a prominent educational psychologist, argues that real learning requires active thinking and cognitive challenge, which AI-driven tools often bypass by providing quick solutions without engaging critical thinking or problem-solving skills. Since neuroplasticity is strengthened by the brain’s effort to form and reinforce new connections through repeated cognitive engagement, relying too much on AI risks weakening this process. Children may become passive consumers of information, missing the opportunity to deeply process and internalise knowledge, which is essential for long-term learning and brain development.

However, the point of this article is not to say that AI is a problem only in the wider world and not in schools. No, the use of AI is especially harmful in schools, as it can lead to people submitting projects that are not their own, to the IB for example (a complaint from my Portuguese teacher), and it can prevent students from developing important research, analytical and writing skills.

Overall, I believe that attention needs to be called to the use of AI in professional research. It cannot be overlooked if we want to prevent the misinformation that AI use can cause.